The metrics:

- Evaluation tool: BOP Toolkit

- For 2D object detection: precision, recall, IoU, mAP

- For 6D pose estimation: ADD, ADI, CoU, VSD

The first evaluation step computes 2D bounding box detection metrics: the mean Average Precision (mAP) based on Intersection over Union (IoU) scores, with an IoU threshold of 0.5.

In 6D pose estimation, the most widely used metric is the Average Distance to the model point (ADD) error (e_ADD). If the model M has indistinguishable views, the error is instead calculated as the Average Distance to the Closest model point (ADI). An estimated pose is considered correct if e < θ = k · d, where k is a constant and d is the object diameter. We considered three values of the constant k: 0.1, 0.2 and 0.3.

In addition, we computed recalls for the Complement over Union (CoU) error. This is a mask-based metric: it renders the mask of the object from the CAD model and the estimated pose, then compares the predicted mask with the ground-truth mask. CoU was computed with three thresholds: 0.3, 0.5 and 0.7.
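To make the 2D detection criterion concrete, the following is a minimal sketch of the IoU test applied to a single predicted box. The function names `box_iou` and `is_true_positive` and the `(x_min, y_min, x_max, y_max)` box format are assumptions made for illustration, not part of the evaluation tool used here; the full mAP computation (ranking detections by confidence and integrating the precision-recall curve) is omitted.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    # Corners of the intersection rectangle (may be empty).
    ix_min, iy_min = max(a[0], b[0]), max(a[1], b[1])
    ix_max, iy_max = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)

    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred_box, gt_box, threshold=0.5):
    """A detection counts as correct when its IoU with the ground truth reaches the threshold."""
    return box_iou(pred_box, gt_box) >= threshold


if __name__ == "__main__":
    gt = (0, 0, 10, 10)
    print(box_iou(gt, (2, 0, 12, 10)))           # overlap 80 / union 120
    print(is_true_positive(gt, (5, 0, 15, 10)))  # overlap 50 / union 150, below 0.5
```

With the 0.5 threshold used above, a box shifted by 2 of 10 units still counts as a true positive, while one shifted by half its width does not.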

| CoU | θ = 0.3 | θ = 0.5 | θ = 0.7 |
|---|---|---|---|
| Box | 94.6% | 100.0% | 100.0% |
| Cup | 94.2% | 99.4% | 100.0% |
| Jug | 79.6% | 97.8% | 99.6% |
| Hotstab | 20.2% | 65.6% | 92.6% |
| Average | 72.15% | 90.57% | 98.05% |
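The CoU recalls in the table above can be read as the fraction of test images whose mask error falls below θ. The sketch below illustrates this under stated assumptions: masks are plain nested lists of 0/1 values, the CoU error is taken as one minus the mask IoU, and the case where both masks are empty is treated as maximum error. The names `cou_error` and `recall` are hypothetical helpers, not the toolkit's API.

```python
def cou_error(mask_est, mask_gt):
    """Complement over Union of two binary masks: 1 - |A ∩ B| / |A ∪ B|."""
    inter = 0
    union = 0
    for row_est, row_gt in zip(mask_est, mask_gt):
        for e, g in zip(row_est, row_gt):
            inter += 1 if (e and g) else 0
            union += 1 if (e or g) else 0
    if union == 0:
        return 1.0  # assumption: two empty masks count as maximum error
    return 1.0 - inter / union


def recall(errors, threshold):
    """Fraction of samples whose error is strictly below the threshold."""
    return sum(1 for e in errors if e < threshold) / len(errors)


if __name__ == "__main__":
    mask_gt = [[1, 1, 0],
               [1, 1, 0]]
    mask_est = [[0, 1, 1],
                [0, 1, 1]]
    err = cou_error(mask_est, mask_gt)  # intersection 2, union 6
    print(err, [recall([err], t) for t in (0.3, 0.5, 0.7)])
```

Because CoU is an error rather than a score, a larger θ is more permissive, which is why the recalls grow from left to right in the table.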

| ADI | k = 0.1 | k = 0.2 | k = 0.3 |
|---|---|---|---|
| Box | 21.6% | 51.0% | 60.4% |
| Cup | 55.0% | 77.8% | 85.8% |
| Jug | 72.2% | 86.6% | 93.0% |
| Hotstab | 44.0% | 64.4% | 73.8% |
| Average | 48.2% | 69.95% | 78.25% |
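The difference between ADD and ADI, and the correctness criterion e < k · d behind the recalls above, can be illustrated with a small dependency-free sketch. It assumes the usual definitions (ADD averages distances between corresponding model points, ADI averages the distance from each ground-truth point to the closest estimated point); the function names, the nested-list rotation format and the toy four-point model are assumptions made for illustration only.

```python
import math


def transform(points, R, t):
    """Apply a 3x3 rotation R (nested lists) and a translation t to each 3D point."""
    return [
        tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))
        for p in points
    ]


def add_error(points, R_est, t_est, R_gt, t_gt):
    """ADD: mean distance between corresponding model points under both poses."""
    est = transform(points, R_est, t_est)
    gt = transform(points, R_gt, t_gt)
    return sum(math.dist(e, g) for e, g in zip(est, gt)) / len(points)


def adi_error(points, R_est, t_est, R_gt, t_gt):
    """ADI: mean distance from each ground-truth point to the closest estimated point."""
    est = transform(points, R_est, t_est)
    gt = transform(points, R_gt, t_gt)
    return sum(min(math.dist(g, e) for e in est) for g in gt) / len(points)


def is_correct(error, diameter, k=0.1):
    """Pose is accepted when the error is below the fraction k of the object diameter."""
    return error < k * diameter


if __name__ == "__main__":
    # Toy model with 4-fold symmetry about the z axis, diameter 2.
    model = [(1, 0, 0), (0, 1, 0), (-1, 0, 0), (0, -1, 0)]
    identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
    rot90_z = [[0, -1, 0], [1, 0, 0], [0, 0, 1]]
    zero = (0, 0, 0)
    print(add_error(model, rot90_z, zero, identity, zero))  # penalizes the rotation
    print(adi_error(model, rot90_z, zero, identity, zero))  # ignores the symmetric rotation
```

In this toy case a 90° rotation about the symmetry axis leaves the point set unchanged, so ADI accepts the pose while ADD rejects it, which is why ADI is the metric reported for objects with indistinguishable views.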

The method comparison:

- YOLOv4 + AAE

- EfficientPose

- YOLO-6D

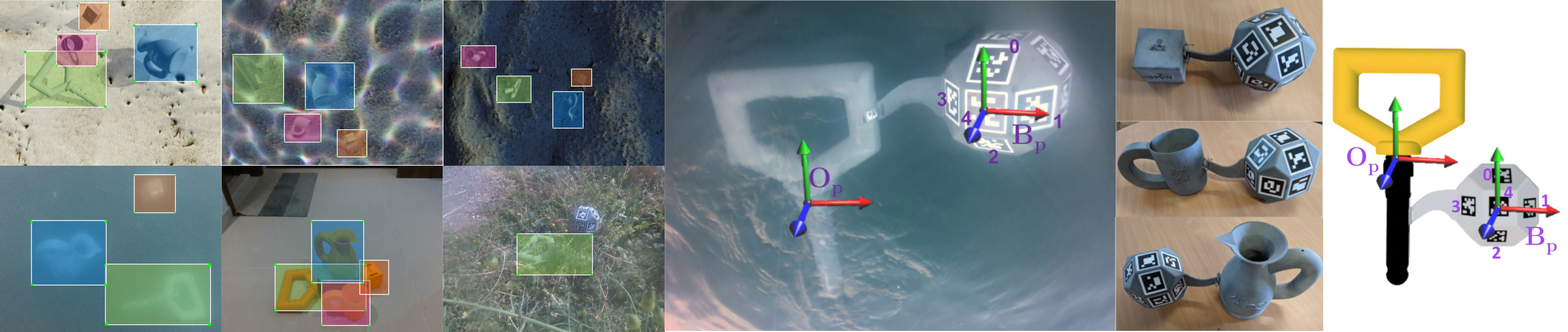

Although our dataset is composed mostly of symmetrical objects, our pipeline achieved better results than comparable state-of-the-art methods. The following tables compare three methods on the hotstab and the jug in terms of ADD, ADI and mAP. For the comparison we chose YOLO-6D [1], a feature-based approach that uses the Perspective-n-Point (PnP) algorithm, and EfficientPose [2], a full-frame, single-shot method that achieves some of the highest results on the LineMod dataset. YOLO-6D and EfficientPose were trained with different input sizes, batch sizes and learning rates, with Adam as the optimizer. For EfficientPose, the best performance was achieved with a batch size of 1, a learning rate of 0.0001 and 500 epochs. To ensure comparability with the other two methods, we opted for its lighter version by setting the scaling hyperparameter φ to 0. Despite its strong results on LineMod, EfficientPose achieves very low scores on our dataset. YOLO-6D performs better than EfficientPose, but it does not reach the scores of our pipeline.

| Hotstab | ADD | ADI | mAP (IoU = 0.5) |
|---|---|---|---|
| EfficientPose | 1.32% | 8.65% | 0.7521 |
| YOLO-6D | 9.58% | 36.71% | 0.77 |
| YOLOv4+AAE | 11.4% | 44.0% | 0.99 |

| Jug | ADD | ADI | mAP (IoU = 0.5) |
|---|---|---|---|
| EfficientPose | 23% | 54.8% | 0.7341 |
| YOLO-6D | 26.50% | 58.44% | 0.816 |
| YOLOv4+AAE | 29.2% | 78.2% | 0.995 |

[2] Bukschat, Yannick, and Marcus Vetter. "EfficientPose: An efficient, accurate and scalable end-to-end 6D multi object pose estimation approach." arXiv preprint arXiv:2011.04307 (2020).